Monitoring an Imagicle Cluster

Alarms

The cluster administrator can monitor the health of the replication service through the status of the following alarms, visible in admin ⇒ Monitoring ⇒ Alarms:

Synchronization Activity: represents the status of synchronization operations on the local node. If the node is not ready to synchronize, this alarm is not evaluated, as it would be meaningless. Otherwise, it shows whether the node is synchronizing with the expected remote node (green), with a different node (yellow), or with no other node at all (red).

Synchronization Readiness: represents whether the local node is ready to synchronize its data. A node might not be ready to synchronize if, during its addition to the cluster or during the last update, it could not complete the [re]initialization ([re]provisioning) procedure. In this case, the only way to restore its operation is to run the Imagicle setup package again, which completes the node initialization.

Time Alignment: shows whether the local node and its expected remote node have the same time configuration and the same system time. The system times of all nodes must be aligned for the cluster to work correctly. If the local and remote times are aligned, the alarm is green; otherwise it turns red.
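The alarm colours described above can be summarised as a small decision function. The following Python sketch is only an illustration of those rules: the function and parameter names are hypothetical and are not part of any Imagicle API.

```python
from typing import Optional


def synchronization_activity_alarm(ready: bool,
                                   current_peer: Optional[str],
                                   expected_peer: str) -> Optional[str]:
    """Return the alarm colour, or None when the alarm is not evaluated.

    All names here are illustrative assumptions, not product identifiers.
    """
    if not ready:
        # A node that is not ready to synchronize does not evaluate this alarm.
        return None
    if current_peer is None:
        return "red"      # synchronizing with no other node at all
    if current_peer == expected_peer:
        return "green"    # synchronizing with the expected remote node
    return "yellow"       # synchronizing with a different node


def time_alignment_alarm(aligned: bool) -> str:
    """Green when local and remote times are aligned, red otherwise."""
    return "green" if aligned else "red"
```

For example, a ready node whose expected remote node is "B" but which is currently synchronizing with "C" would show a yellow Synchronization Activity alarm.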

Event notifications

While the Replication Service is running, some events are notified to the cluster administrator to help identify potential issues.

Readiness events

When a node is not ready to synchronize its data, it reports this with a sync not ready notification, which includes the problem that prevents its readiness.

When that problem is solved, the node notifies that it's back to normal with a sync ready notification.

Synchronization events

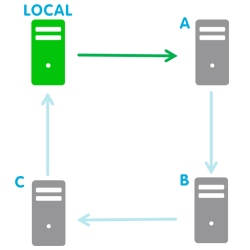

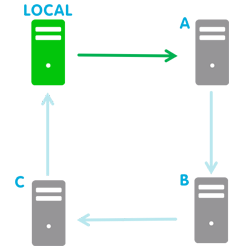

In normal conditions, each cluster node synchronizes with its successor in the cluster topology.

No notification is sent until an event changes this situation.

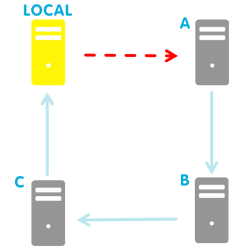

If the local node fails to synchronize with its successor, it sends a failure notification.

Then the node tries to synchronize with the other nodes of the cluster and, if successful, it sends a failover notification.

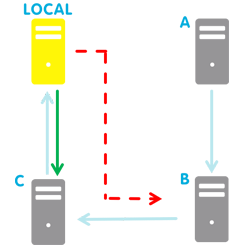

In this situation, the remote node used for synchronization does not change unless a new failure occurs.

A new failure notification is sent for each synchronization failure with a remote node, and it reports the failure reason.

A new failover notification is sent for each successful remote node change.

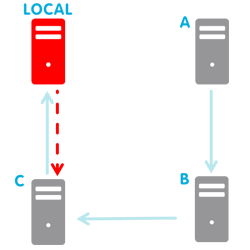

If the local node fails to synchronize with every other node in the cluster, it sends a no available node notification.

After any failure, the local node periodically tries to synchronize with its successor and, whenever it succeeds, it sends a failback notification to signal that its normal behaviour has been restored.
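The sequence of failure, failover, no available node and failback notifications described above can be modelled as a small state machine. The Python sketch below is a toy model under stated assumptions, not the Replication Service implementation: the class, method and node names are hypothetical, candidate nodes are tried in list order, and a failure notification is recorded only when the node currently in use fails.

```python
from typing import Callable, List, Optional


class SyncMonitor:
    """Toy model of the notifications sent by one node (hypothetical names)."""

    def __init__(self, successor: str, others: List[str]) -> None:
        self.successor = successor              # expected remote node
        self.others = others                    # remaining cluster nodes
        self.current: Optional[str] = successor  # remote node in use
        self.events: List[str] = []             # notifications sent so far

    def cycle(self, try_sync: Callable[[str], bool]) -> None:
        """Run one synchronization attempt; try_sync(node) reports success."""
        degraded = self.current != self.successor
        # After any failure, periodically retry the successor.
        if degraded and try_sync(self.successor):
            self.current = self.successor
            self.events.append("failback")
            return
        # Keep the remote node in use for as long as it works.
        if self.current is not None:
            if try_sync(self.current):
                return
            self.events.append(f"failure ({self.current})")
        failed = self.current
        # Look for another node, skipping the one that just failed.
        for node in self.others:
            if node != failed and try_sync(node):
                self.current = node
                self.events.append(f"failover ({node})")
                return
        # Assumption: notify only on the transition to "no node available".
        if failed is not None:
            self.events.append("no available node")
        self.current = None
```

In this model, a node with successor "B" and other nodes "C" and "D" records a failure and then a failover to "C" when "B" becomes unreachable, and records a failback as soon as a later cycle manages to synchronize with "B" again.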

Time alignment events

When the time difference between a node and its successor is too high (i.e. over 15 seconds), data replication is inhibited to prevent unexpected behaviour, and a time not aligned notification is sent.

A time aligned notification is then sent when the time difference is back to normal.
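As an illustration of the rule above, the following Python sketch compares two clock readings against the 15-second threshold and emits a notification only when the alignment state changes. The class and attribute names are hypothetical, and how the product actually reads the remote clock is not covered here.

```python
from datetime import datetime, timedelta
from typing import Optional

# Threshold quoted in the text: a difference over 15 seconds is too high.
MAX_SKEW = timedelta(seconds=15)


class TimeAlignmentMonitor:
    """Tracks alignment between a node and its successor (hypothetical names)."""

    def __init__(self) -> None:
        self.aligned = True  # assume the clocks start aligned

    def check(self, local: datetime, remote: datetime) -> Optional[str]:
        """Return a notification name on a state change, otherwise None."""
        now_aligned = abs(local - remote) <= MAX_SKEW
        event = None
        if now_aligned != self.aligned:
            event = "time aligned" if now_aligned else "time not aligned"
        self.aligned = now_aligned
        return event

    @property
    def replication_allowed(self) -> bool:
        # Data replication is inhibited while the clocks are not aligned.
        return self.aligned
```

With this sketch, a 10-second difference produces no notification, a 16-second difference produces a time not aligned notification and inhibits replication, and a later reading within 15 seconds produces a time aligned notification.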

Please check here for more details.